Edge Impulse: Analytics trained in the cloud, deployed on the edge

My journey enabling Edge Impulse on a custom TI board

In the past few months, I have worked on the analytics software stack for a new-generation Texas Instruments (TI) SoC. This was my first foray into the world of machine learning and artificial intelligence, and I got the chance to learn a lot in this domain. Last month TI organized a hackathon, where I was part of the team that enabled Edge Impulse on the TDA4 platform. In this article, I will walk you through my journey on this project. Honestly, even if you don’t know anything about machine learning, I suggest going through this blog post to get the basics of what it takes to develop an ML application.

What is Edge Impulse?

It’s a platform for developing machine learning applications for embedded devices. The platform launched in 2020 and has since become fairly popular because its services are easy to use.

Users can collect data from a laptop camera, an embedded device, or even a smartphone. It has a nice user interface for labeling the data in the cloud. You can train and test various models and analyze their performance in the Edge Impulse Studio. Finally, it gives you a ready-made way to deploy the final application binary without any external dependencies. The website also offers APIs to integrate these services into your own custom applications.

What is TDA4VM SoC?

TDA4VM is a new-generation chip from Texas Instruments targeted at analytics applications. It’s a multicore SoC with a dedicated deep learning accelerator for running analytics algorithms. It has a rich set of peripherals for connectivity, storage, multimedia, graphics, security, etc.

It can also run the Linux operating system, with driver support for camera, display, and networking through standard Linux interfaces. The software supports a variety of analytics runtimes, including TensorFlow Lite, ONNX, and TVM/Neo-AI-DLR.

Problem statement

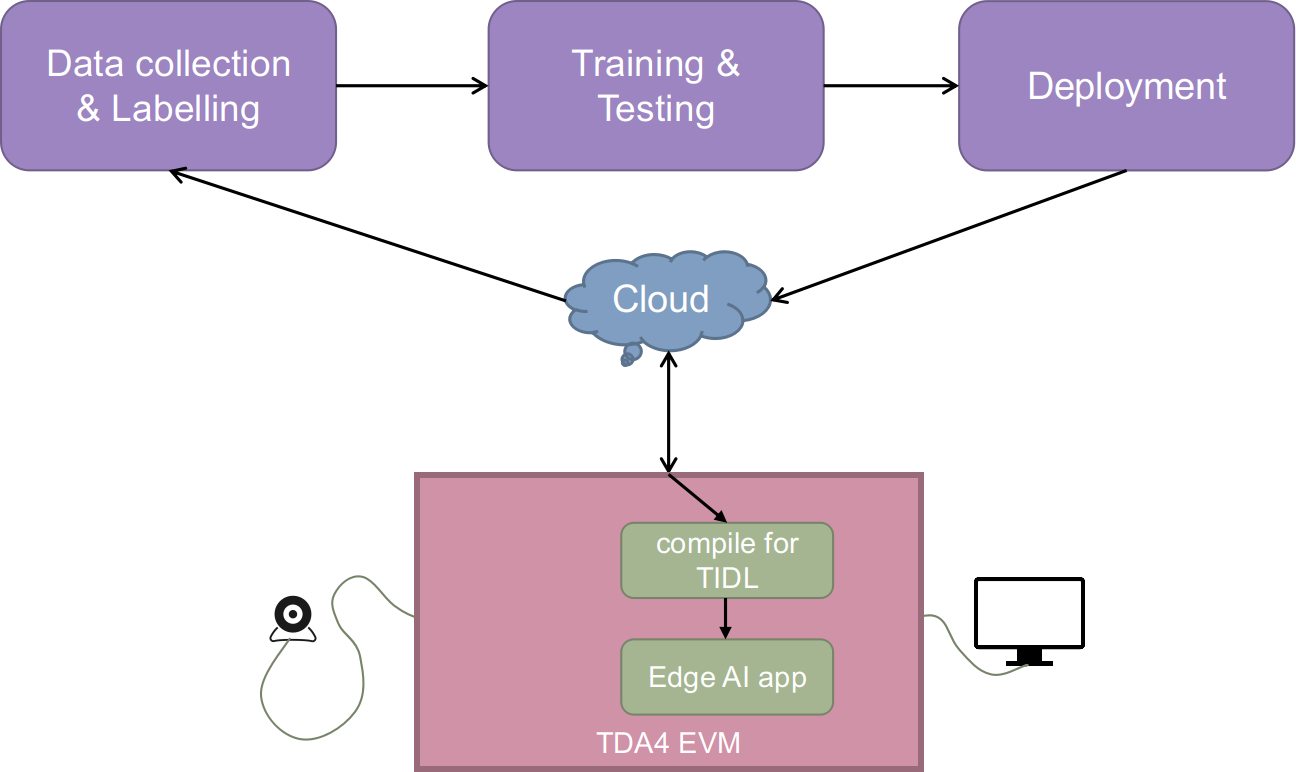

For the hackathon, we wanted to enable the edge impulse on the TDA4VM platform and also integrate the TI software to run the demo with deep learning acceleration. This meant first understanding their flow and then integrating the generated models with TI deep learning libraries. Here is a simple block diagram explaining the data flow:

Our goal was to showcase how easy it is for someone to train a model with cloud services and deploy it onto TI’s performance-optimized embedded platforms: analytics trained in the cloud, deployed on the edge.

To do this, we planned a demo that starts by training an object detection model in the cloud and then deploys it on the TI board. The demo captures video from a camera, detects the trained objects, and visualizes the analytics (aka inference) output along with some performance measurements.

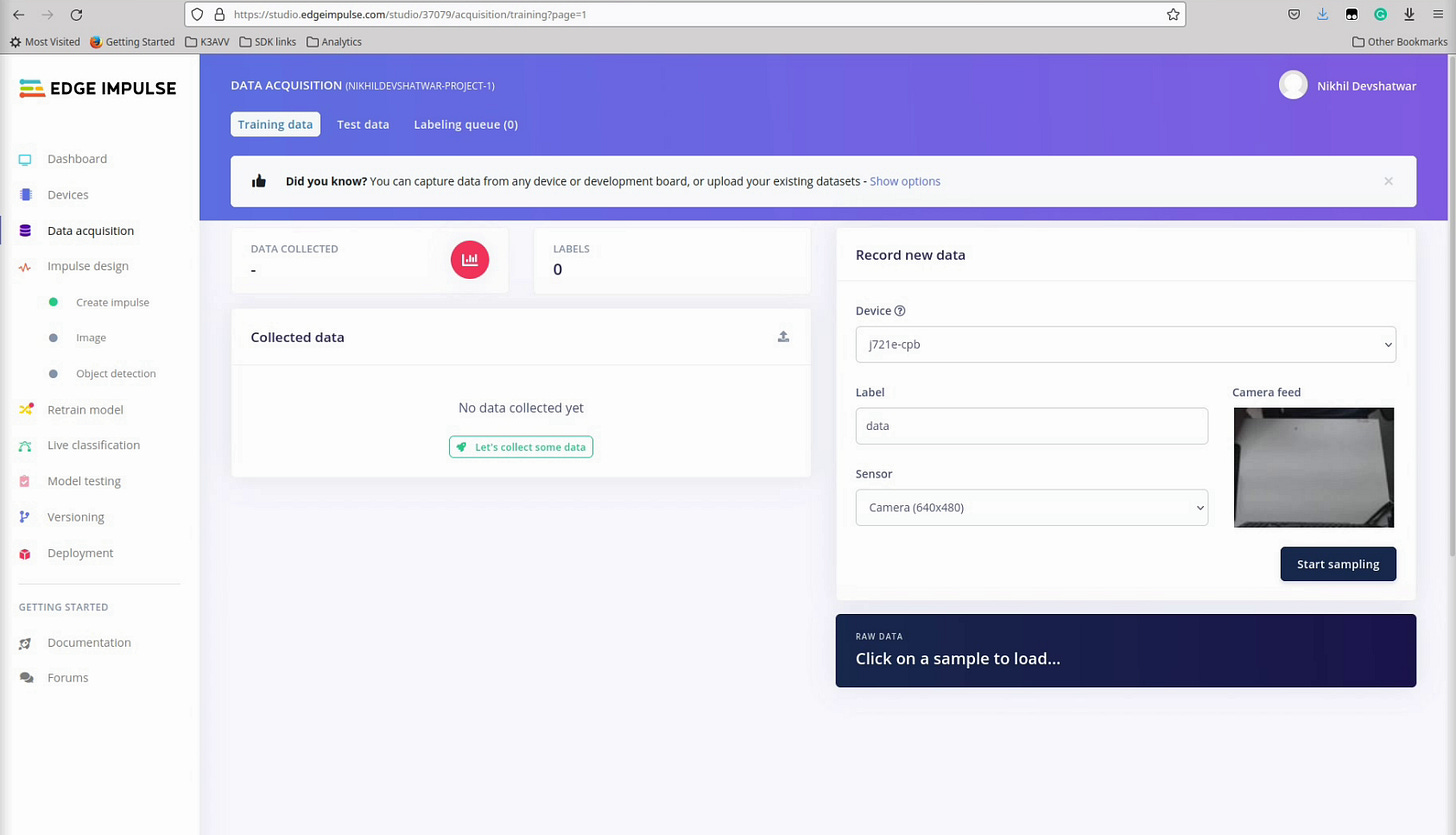

Data collection from the TDA4 EVM

We created an account on edgeimpulse.com and tried to connect the TDA4 EVM to the cloud. The TI Linux SDK filesystem includes Node.js, GStreamer, and V4L2-compliant video capture drivers. Thanks to this open-source software, I was able to stream live camera data from my board to the cloud within just a few minutes of setup.
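To confirm that the camera is visible through V4L2 before starting the client, you can list the video nodes the kernel has registered. The Python sketch below is not part of the author’s flow; it reads the standard Linux sysfs attribute `/sys/class/video4linux/<node>/name`, and the `sysfs_root` parameter exists only so the function can be pointed at a test directory. A single USB camera may register more than one node.

```python
from pathlib import Path


def list_v4l2_devices(sysfs_root: str = "/sys/class/video4linux") -> dict[str, str]:
    """Map each V4L2 node (e.g. 'video0') to the name its driver reports.

    Reads <sysfs_root>/<node>/name for every node. Returns an empty dict when
    the directory does not exist (for example on a non-Linux host).
    """
    root = Path(sysfs_root)
    devices: dict[str, str] = {}
    if not root.is_dir():
        return devices
    for node in sorted(root.iterdir()):
        name_file = node / "name"  # sysfs entries are symlinks; is_file() follows them
        if name_file.is_file():
            devices[node.name] = name_file.read_text().strip()
    return devices


if __name__ == "__main__":
    for node, name in list_v4l2_devices().items():
        print(f"/dev/{node}: {name}")
```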

```
$ npm install -g --unsafe-perm edge-impulse-linux
$ edge-impulse-linux
Edge Impulse Linux client v1.2.6
[SER] Using microphone hw:0,0
[SER] Using camera C922 Pro Stream Webcam starting...
[SER] Connected to camera
[WS ] Device "j721e-cpb" is now connected to project
```

The client uses Node.js and GStreamer to stream my camera to the cloud, and in the Edge Impulse Studio I could see the live camera feed from my board. Although I had worked on GStreamer applications before, I was not aware that there were Node.js packages for it as well. I respect GStreamer even more now! Here is a snapshot of the live camera feed:

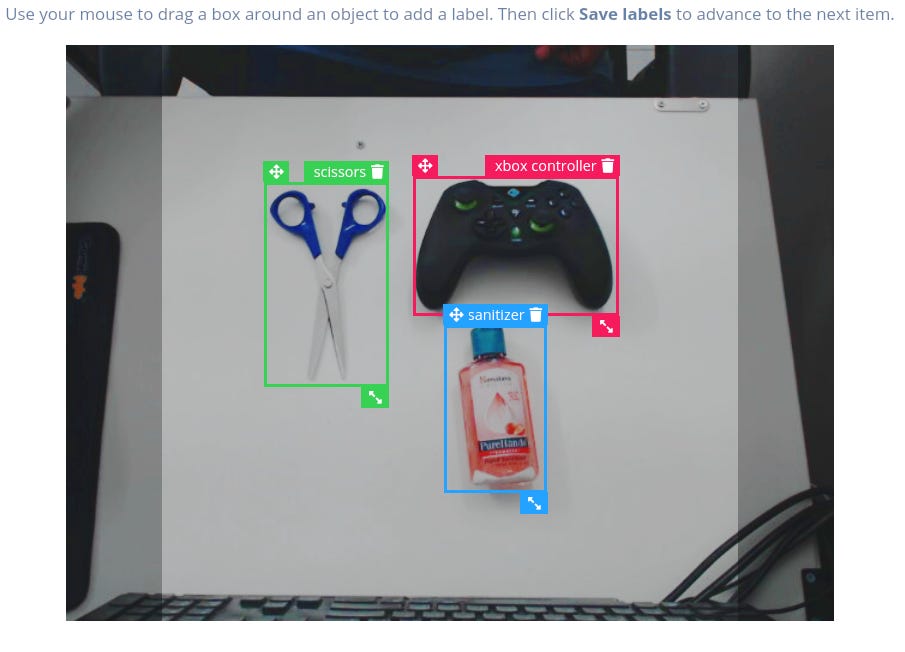

This was the only app I had to run on the board; everything after that happened in the Edge Impulse Studio. We captured a few sets of images for training and testing, using everyday household items such as scissors, sanitizer, a measuring tape, a remote, and an Xbox controller. In total, we collected about 50-60 images for this demo. The next step was to draw bounding boxes around all the objects and label them. This labeled data is later used to train the model to detect these objects.
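With only 50-60 images, a single mislabeled or malformed box matters. The following Python sketch is a hypothetical sanity check, not an Edge Impulse feature: it assumes boxes stored in pixel coordinates with a top-left origin, flags labels outside the expected class set and boxes that are empty or extend past the image, and counts boxes per class. The `BoundingBox` type and the function names are illustrative, and the image size is whatever your captured images use.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """One labeled box in pixel coordinates; (x, y) is the top-left corner."""
    label: str
    x: int
    y: int
    width: int
    height: int


def check_annotations(
    annotations: dict[str, list[BoundingBox]],
    classes: set[str],
    image_size: tuple[int, int],
) -> list[str]:
    """Return readable problems: unknown labels, empty boxes, out-of-frame boxes."""
    img_w, img_h = image_size
    problems = []
    for image, boxes in annotations.items():
        for box in boxes:
            if box.label not in classes:
                problems.append(f"{image}: unknown label {box.label!r}")
            if box.width <= 0 or box.height <= 0:
                problems.append(f"{image}: empty box for {box.label!r}")
            elif (box.x < 0 or box.y < 0
                  or box.x + box.width > img_w or box.y + box.height > img_h):
                problems.append(f"{image}: box for {box.label!r} leaves the frame")
    return problems


def label_counts(annotations: dict[str, list[BoundingBox]]) -> Counter:
    """Count boxes per class, a quick check that no class is under-represented."""
    return Counter(box.label for boxes in annotations.values() for box in boxes)
```

Running both checks before training is cheap and catches labeling slips that would otherwise only show up as poor detection accuracy.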

Training and testing

The Edge Impulse Studio supports a few ready-made models and also lets you upload a custom model. We picked the MobileNetV2 SSD model for object detection. We rebalanced the dataset between training and test data and started training with the default parameters.
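Rebalancing assigns each sample to either the training set or the test set. Below is a minimal sketch of such a split, assuming an 80/20 ratio and a fixed random seed so the split is reproducible; Edge Impulse’s own rebalancing may use a different ratio or algorithm.

```python
import random


def split_dataset(
    items: list[str], test_fraction: float = 0.2, seed: int = 0
) -> tuple[list[str], list[str]]:
    """Shuffle items reproducibly and return (train, test)."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(items)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]
```

For 60 images this yields 48 training and 12 test images; using a seeded `random.Random` instance keeps the split stable across runs without touching the global random state.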

All training happened in the cloud, so we did not need to install TensorFlow, Python packages, or any other dependencies locally.

Deploying on the target

The Edge Impulse Studio can deploy the final trained model from the cloud to the target machine. It compiles the model with the EON compiler and deploys the resulting binary on the target. All runtime dependencies are packaged within the binary itself, so nothing else is needed to run it offline. A simple command like the following starts the object detection program with the trained model:

```
root@j7-evm:~# edge-impulse-linux-runner
Edge Impulse Linux runner v1.2.6
[RUN] Already have model, not downloading...
[RUN] Starting the object detection
[RUN] Parameters image size 320x320 px (3 channels) classes [ 'scissors', 'sanitizer', 'tape', 'xbox controller' ]
[RUN] Using camera C922 Pro Stream Webcam starting...
[RUN] Connected to camera

Want to see a feed of the camera and live classification in your browser? Go to http://192.168.0.114:4912
```
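The runner reports a model input size of 320x320 pixels. If detections are reported in model-input pixels, they must be scaled before being drawn on the full camera frame. The sketch below assumes each detection is a dict with `label`, `value` (confidence), `x`, `y`, `width`, and `height` keys, that the frame was stretched to 320x320 rather than cropped (a crop would also need an offset), and an arbitrary 0.5 confidence threshold. The `Detection` type is illustrative.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """A detection scaled to camera-frame pixels."""
    label: str
    score: float
    x: float
    y: float
    width: float
    height: float


def to_frame_coords(
    boxes: list[dict],
    frame_size: tuple[int, int],
    model_size: tuple[int, int] = (320, 320),
    min_score: float = 0.5,
) -> list[Detection]:
    """Drop low-confidence boxes and scale the rest to frame pixels.

    Assumed input: dicts with 'label', 'value', 'x', 'y', 'width', 'height'
    in model-input pixels, from a frame stretched (not cropped) to model_size.
    """
    scale_x = frame_size[0] / model_size[0]
    scale_y = frame_size[1] / model_size[1]
    detections = []
    for box in boxes:
        if box["value"] < min_score:
            continue  # below the (arbitrary) confidence threshold
        detections.append(Detection(
            label=box["label"],
            score=box["value"],
            x=box["x"] * scale_x,
            y=box["y"] * scale_y,
            width=box["width"] * scale_x,
            height=box["height"] * scale_y,
        ))
    return detections
```

For a 1280x720 frame, a box at (160, 160) of size 32x32 in model pixels maps to (640, 360) with size 128x72, because the horizontal and vertical scale factors differ (4.0 and 2.25).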

With that, we were running a cloud-trained model on the TDA4 EVM. The application used a USB camera and hosted a web server to show the analytics visualization in the browser. Here you can see the live object detection in action:

Accelerating the solution

We got the prototype done in a few hours. Now it was time to show what sets the TI SoC apart. The default binary deployed by Edge Impulse assumes there is no hardware accelerator for running the analytics model, but the TDA4 SoC has a dedicated AI accelerator that would significantly improve performance. Until then, we had mostly used the Edge Impulse Studio infrastructure; now we wanted to add some TI sauce to boost the performance of this demo while keeping ease of use as the primary focus.

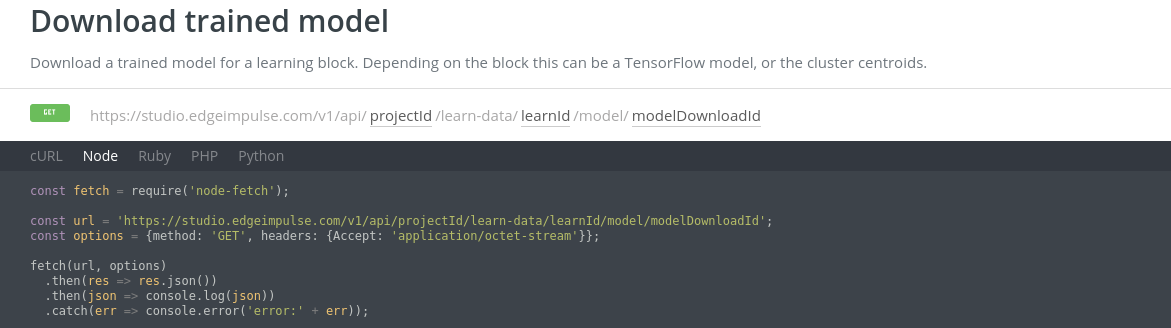

To run the same demo with acceleration, we needed access to the model trained in the cloud, so that we could compile it to use the TI deep learning (TIDL) accelerator through the TFLite delegate API. We also needed the metadata associated with the model, such as the labels.
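Once the metadata is available, the class names have to reach the accelerated application in the same order as the model’s output class indices. This sketch assumes a hypothetical JSON metadata object with a `classes` list in index order (matching the class list the runner prints) and writes one label per line, a common convention for TFLite detection demos. The actual metadata format and the label format expected by edge_ai_app may differ.

```python
import json
from pathlib import Path


def write_label_file(metadata_json: str, out_path: Path) -> dict[int, str]:
    """Extract class names from (hypothetical) model metadata and save them.

    Assumes metadata like {"classes": ["scissors", ...]} ordered by the
    model's output class index. Writes one label per line and returns the
    index -> label mapping.
    """
    metadata = json.loads(metadata_json)
    classes = metadata["classes"]
    if len(set(classes)) != len(classes):
        raise ValueError("duplicate class names in metadata")
    out_path.write_text("\n".join(classes) + "\n")
    return dict(enumerate(classes))
```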

As the block diagram above shows, the deployment step is slightly different when inference is accelerated. In this flow, the TFLite model is downloaded from the cloud and compiled for TI’s deep learning accelerator. The final binary uses edge_ai_app, which shows the visualization on a display connected to the EVM. As expected, performance improved significantly compared to the previous demo.
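Quantifying a speedup like this usually means timing many inference calls after a few warm-up runs and comparing the median latency of the two paths. The harness below is a generic sketch: `infer` is any zero-argument callable, for example a wrapper around a TFLite `Interpreter.invoke()` call running on the CPU or with the TIDL delegate. It does not reproduce the author’s measurements.

```python
import statistics
import time
from typing import Callable


def measure_latency(
    infer: Callable[[], object], warmup: int = 5, runs: int = 50
) -> dict[str, float]:
    """Time repeated calls to infer() and report median/max latency and FPS.

    Warm-up calls are not timed, so one-time costs (memory allocation,
    caches, accelerator initialization) do not skew the result.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        infer()
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    median_ms = statistics.median(samples_ms)
    return {
        "median_ms": median_ms,
        "max_ms": max(samples_ms),
        "fps": 1000.0 / median_ms if median_ms > 0 else float("inf"),
    }
```

The median is used instead of the mean because occasional scheduling hiccups on a Linux system produce outliers that would otherwise inflate the average.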

Automating the deployment flow

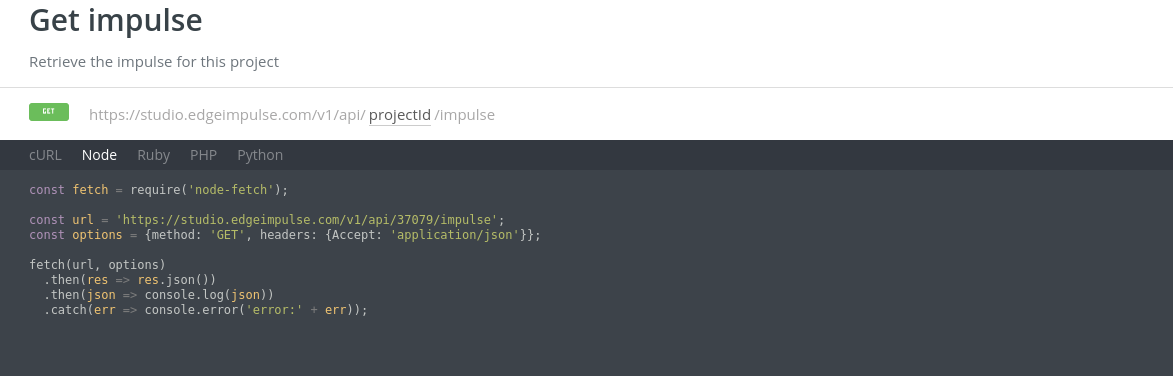

The Edge Impulse Studio also exposes web APIs for a variety of services. My task was to hook these APIs up to the TIDL compilation on the target. I applied my Node.js knowledge from hobby projects to create a simple script that automates the deployment flow, using several of these REST APIs to fetch the model and its metadata onto the target.
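The author’s script was written in Node.js; the sketch below shows the same idea in Python using only the standard library. It assumes the Edge Impulse API base URL `https://studio.edgeimpulse.com/v1/api` and API-key authentication through an `x-api-key` header, both of which should be checked against the Edge Impulse API reference. The endpoint path is left to the caller because the exact endpoints the author used are not listed here.

```python
import json
import urllib.request

# Assumed base URL for project-scoped endpoints; verify against the API reference.
API_BASE = "https://studio.edgeimpulse.com/v1/api"


def build_api_request(project_id: int, api_key: str, path: str = "") -> urllib.request.Request:
    """Build an authenticated GET request for a project-scoped endpoint."""
    url = f"{API_BASE}/{project_id}"
    if path:
        url += "/" + path.lstrip("/")
    return urllib.request.Request(url, headers={"x-api-key": api_key}, method="GET")


def fetch_json(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Building the request separately from sending it keeps the URL and headers easy to inspect and test; `fetch_json(build_api_request(project_id, api_key))` would then request the project-level resource.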

The custom deployment script downloads the TFLite model, compiles it, and generates a binary that uses TIDL to offload the inference (object detection) operation. So instead of running the edge-impulse-linux-runner application, the user runs edge_ai_app with the same model.
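The runner log earlier shows `Already have model, not downloading...`, and a custom deployment script benefits from the same behavior: downloading (and recompiling) only when the model has changed. A minimal sketch, assuming the script knows the expected SHA-256 of the current model (for example from project metadata; this source is hypothetical) and receives the actual download step as a callable.

```python
import hashlib
from pathlib import Path
from typing import Callable, Optional


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large models are not read into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            digest.update(chunk)
    return digest.hexdigest()


def ensure_model(
    model_path: Path,
    expected_sha256: Optional[str],
    download: Callable[[Path], None],
) -> bool:
    """Download the model only if it is missing or stale; return True if downloaded."""
    if model_path.is_file() and (
        expected_sha256 is None or sha256_of(model_path) == expected_sha256
    ):
        return False  # cached copy is present and (if checked) up to date
    model_path.parent.mkdir(parents=True, exist_ok=True)
    download(model_path)
    if expected_sha256 is not None and sha256_of(model_path) != expected_sha256:
        raise RuntimeError(f"checksum mismatch for {model_path}")
    return True
```

When `ensure_model` returns False, the script can also skip the TIDL compilation step, since the compiled artifacts from the previous run still match the model.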

The Edge AI devkit is designed with ease of use in mind, so running a different binary was, if anything, an even better experience for users. It supports VS Code projects, which allow line-by-line debugging of the C++ and Python demo apps in the IDE, making embedded development even simpler.

Conclusion

Unfortunately, I cannot disclose the performance numbers just yet, but inference time improved significantly with the TI deep learning accelerator. We successfully delivered the following message: for common hardware like cameras and displays, we should embrace the open-source software ecosystem, and focus on enabling differentiated hardware, like analytics accelerators, through custom software components that integrate seamlessly with other open-source software.

Here are my learnings from this project:

Having V4L2-compliant drivers and other open-source components helped us quickly enable the demo on this device.

Platforms like Edge Impulse can be used to prototype machine learning applications on TI platforms.

Model training has traditionally been complicated; Edge Impulse makes it so easy that someone with no machine learning background can do it in hours.

I hope you learned a few things from this article as well.

References

Edge Impulse: https://www.edgeimpulse.com/

TI TDA4VM SoC: https://www.ti.com/product/TDA4VM

Deep learning: https://en.wikipedia.org/wiki/Deep_learning

TensorFlow Lite: https://www.tensorflow.org/lite

Edge Impulse API: https://docs.edgeimpulse.com/reference
